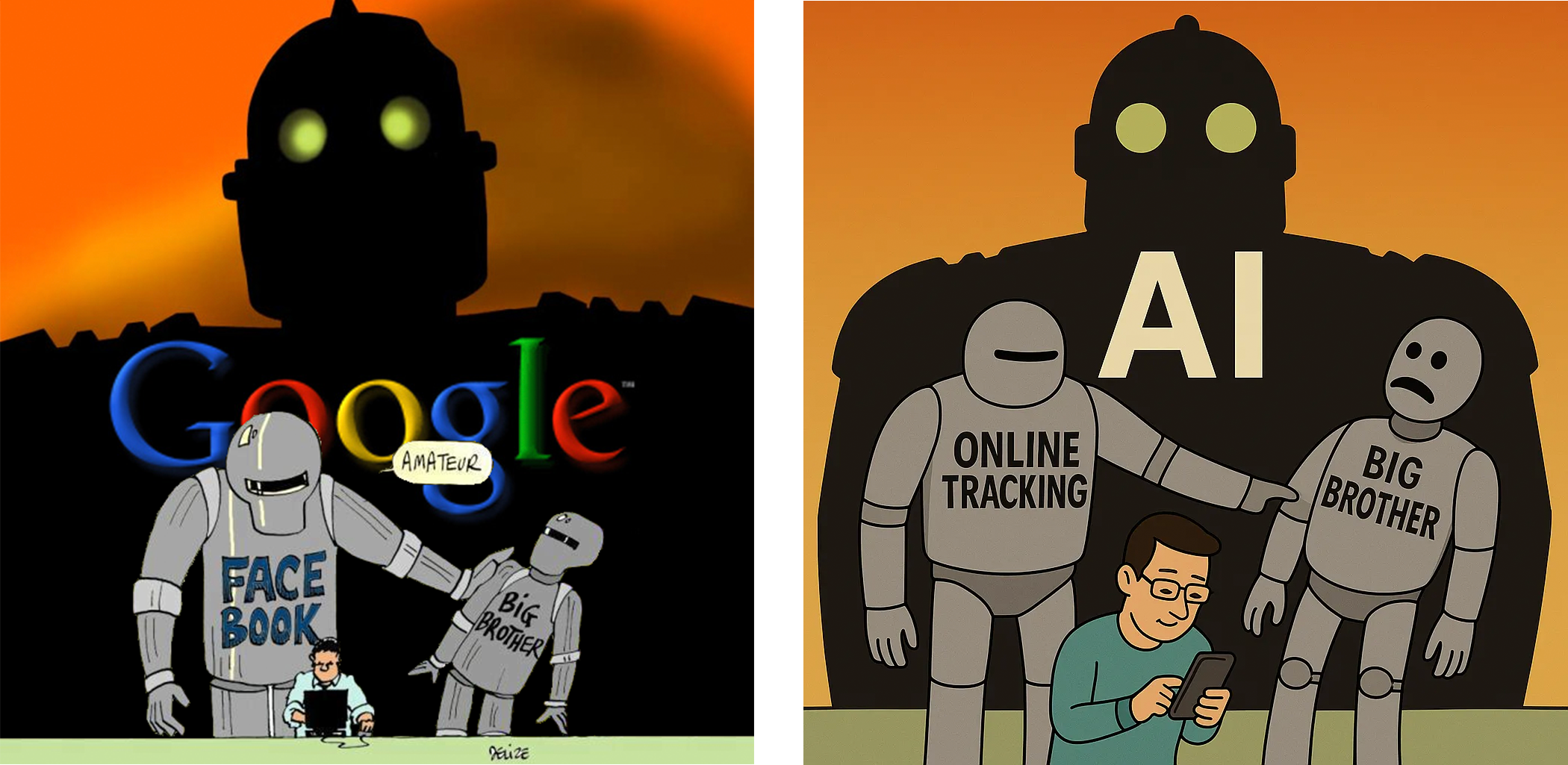

AI surveillance should be banned while there is still time.

All the privacy harms of online tracking are also present with AI, only worse.

While chatbot conversations resemble longer search queries, chatbot privacy harms have the potential to be significantly worse because the inference potential is dramatically greater. Longer input invites more personal information to be provided, and people are starting to bare their souls to chatbots. The conversational format can make it feel like you’re talking to a friend, a professional, or even a therapist. While search queries reveal interests and personal problems, AI conversations take their specificity to another level and, in addition, reveal thought processes and communication styles, creating a much more comprehensive profile of your personality.

This richer personal information can be more thoroughly exploited for manipulation, both commercially and ideologically, for example, through behavioral chatbot advertising and models designed (or themselves manipulated through SEO or hidden system prompts) to nudge you towards a political position or product. Chatbots have already been found to be more persuasive than humans and have caused people to go into delusional spirals as a result. I suspect we’re just scratching the surface, since they can become significantly more attuned to your particular persuasive triggers through chatbot memory features, where they train and fine-tune based on your past conversations, making the influence much more subtle. Instead of an annoying and obvious ad following you around everywhere, you can have a seemingly convincing argument, tailored to your personal style, with an improperly sourced “fact” that you’re unlikely to fact-check or a subtle product recommendation you’re likely to heed.

That is, all the privacy debates surrounding Google search results from the past two decades apply one-for-one to AI chats, but to an even greater degree. That’s why we (at DuckDuckGo) started offering Duck.ai for protected chatbot conversations and optional, anonymous AI-assisted answers in our private search engine. In doing so, we’re demonstrating that privacy-respecting AI services are feasible. But unfortunately, such protected chats are not yet standard practice, and privacy mishaps are mounting quickly. Grok leaked hundreds of thousands of chatbot conversations that users thought were private. Perplexity’s AI agent was shown to be vulnerable to hackers who could slurp up your personal information. OpenAI is openly talking about their vision for a “super assistant” that tracks everything you do and say (including offline). And Anthropic is going to start training on your chatbot conversations by default (previously the default was off). I collected these from just the past few weeks!

It would therefore be ideal if Congress could act quickly to ensure that protected chats become the rule rather than the exception. And yet, I’m not holding my breath because it’s 2025 and the U.S. still doesn’t have a general online privacy law, let alone privacy enshrined in the Constitution as a fundamental right, as it should be. However, there does appear to be an opening right now for AI-specific federal legislation, despite the misguided attempts to ban state AI legislation.

Time is running out because every day that passes further entrenches bad privacy practices. Congress must move before history completely repeats itself and everything that happened with online tracking happens again with AI tracking. AI surveillance should be banned while there is still time. No matter what happens, though, we will still be here, offering protected services, including optional AI services, to consumers who want to reap the productivity benefits of online tools without the privacy harms.

Informative.

This is what I most wanted to see protected. However, I can't see a way to do it. So, my idea is this: since we cannot hide our personal light, what about lighting the world with sunlight? I mean, would it make sense to create a browser that reports standardized user information, so that even if they collect the data, it can't be traced to any individual user? They'd be blinded by an abundance of light rather than darkness... If this exists, I am not aware of it.

By "standard" I mean: constantly standard, or systematically standard, or actively changing but still systematically standard.