Rule 1: Reality is always more complicated.

I’ve always been drawn to characters with “rules.”

Over the past few years, I’ve been noodling on some rules of my own for making good decisions.

Rule 1: Reality is always more complicated.

I prefer the more general framing, but this rule is also often phrased as “the map is not the territory,” because every map is an imperfect representation of the place it depicts. For example, Apple Maps won’t tell me where the current potholes are, nor does it place every tree correctly (at least not yet!). All maps have some fidelity limit: Apple will never get an accurate blade-of-grass count for every yard. Maps can also distort reality in various ways (the familiar Mercator projection, for instance, greatly exaggerates the size of regions near the poles).

These omissions and misrepresentations are often fine if your decisions don’t turn on them. But if they do, you need a better map (or description, model, etc.).

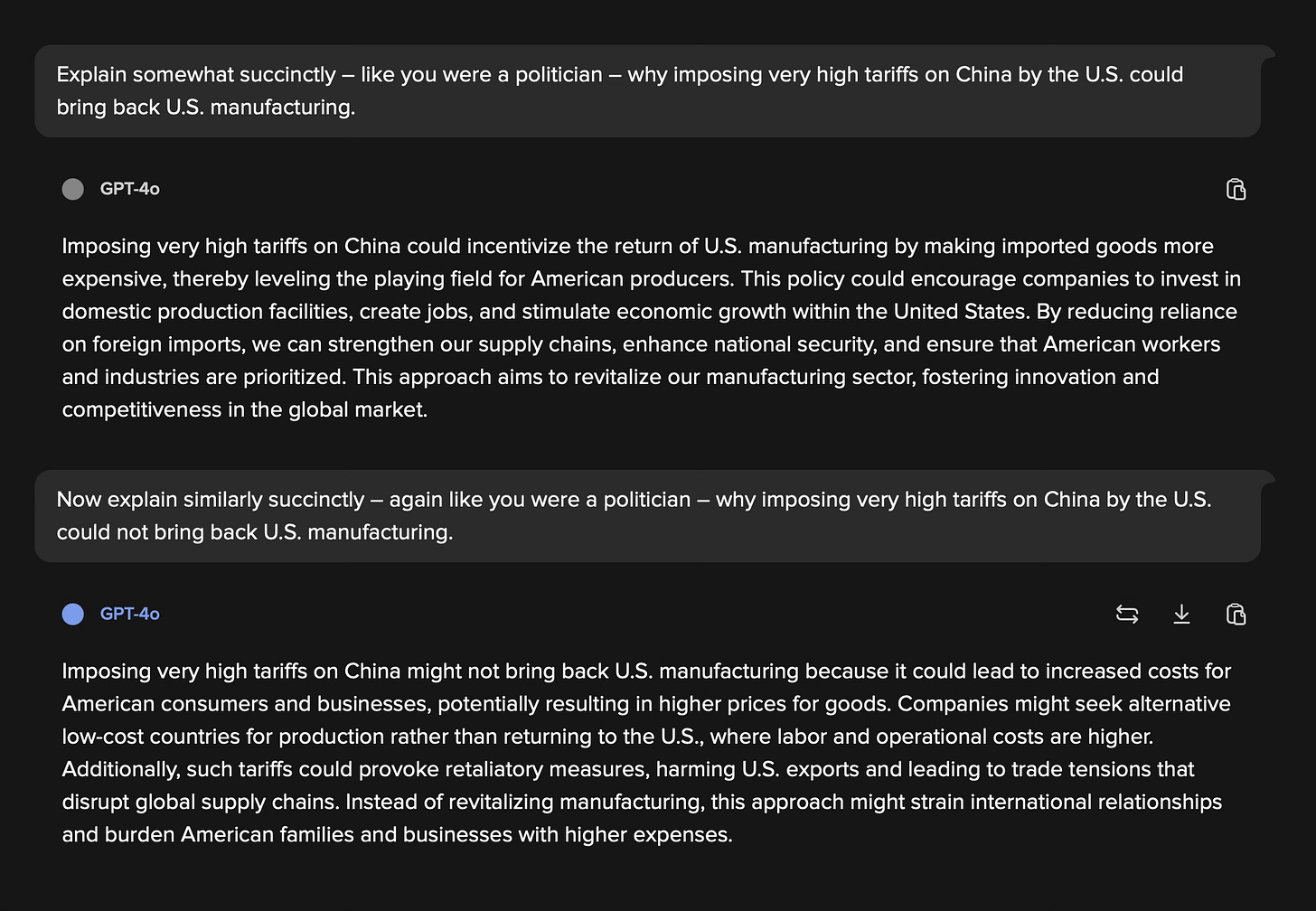

So many fallacies and bad decisions stem from not heeding this rule, which is why it is Rule 1. Chief among them is the narrative fallacy, which I believe is the actual root cause of many of our societal problems. People listen to stories (narratives) from politicians, influencers, and others, and if a story sounds plausible, some percentage of listeners will believe it, at least for a while. But just because something is conceivable doesn’t mean it is true, or even likely true, and more to the point, a story is just another imperfect map. Reality is always more complicated.

Suppose you are faced with a complex, dynamic system, as in most modern societal debates (for example, trying to fix part of the economy), and you try to change it based on an overly simplistic model. You very likely won’t get what you want, and you’re pretty much guaranteed to create unintended consequences that your basic model doesn’t predict. Many cultural debates follow a similar pattern: they rely on an overly simplistic model (for example, a binary categorization of race, gender, political ideology, etc.) and then grapple with the numerous edge cases that don’t fit neatly into it.

This failure mode isn’t limited to political or social issues, though. Developers often fall into it with refactors, aiming for “clean code,” only to painstakingly add back, over time, all the edge cases they removed as their code grapples with reality. In medicine, complex diagnoses can fall through the cracks, sometimes for decades, because the official labels and categories haven’t yet caught up with the more complicated reality.

In physics, there’s “a joke about a physicist who said he could predict the winner of any race provided it involved spherical horses moving through a vacuum.” For every assumption you make, you have to decide how far down the rabbit hole to go with it; that is, how complex to make your model of reality. That’s because reality is always more complicated than your description of it. In a vacuum, a bowling ball and a feather fall at the same rate; in air, they don’t, because of the added complexity of air resistance.
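To make the bowling-ball-and-feather example concrete, here is a minimal Python sketch that compares a simple model (free fall with no air) against a slightly more complicated one that adds quadratic air drag. The helper `fall_time` is hypothetical, and the masses, areas, drag coefficients, air density, and 10 m drop height are rough values assumed purely for illustration, not measurements.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def fall_time(mass, area, drag_coeff, height, air_density, dt=1e-4):
    """Seconds for an object dropped from rest to fall `height` meters.

    Hypothetical helper: models quadratic drag, F = 0.5 * rho * Cd * A * v^2,
    stepped forward with simple (semi-implicit) Euler integration.
    With air_density=0 the drag term vanishes and this is plain free fall.
    """
    k = 0.5 * air_density * drag_coeff * area  # drag constant, kg/m
    t, v, y = 0.0, 0.0, 0.0
    while y < height:
        a = G - (k / mass) * v * v  # net downward acceleration
        v += a * dt
        y += v * dt
        t += dt
    return t


# Rough, ASSUMED parameters for illustration only (SI units).
BOWLING_BALL = dict(mass=7.0, area=math.pi * 0.109**2, drag_coeff=0.47)
FEATHER = dict(mass=0.0005, area=0.002, drag_coeff=1.0)
HEIGHT = 10.0  # hypothetical drop height, meters

for label, rho in [("vacuum", 0.0), ("air", 1.2)]:
    ball = fall_time(**BOWLING_BALL, height=HEIGHT, air_density=rho)
    feather = fall_time(**FEATHER, height=HEIGHT, air_density=rho)
    print(f"{label:>6}: bowling ball {ball:.2f} s, feather {feather:.2f} s")
```

In the vacuum case, mass drops out entirely and both objects land after about √(2h/g) ≈ 1.43 s; in the air case, the feather takes several times longer under these assumed numbers. Even the drag version is just a better map, not the territory: it ignores, for example, a real feather’s tumbling and drifting.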

So, for any decision-making or problem-solving you’re doing, consider whether your current underlying assumptions (descriptions of reality) are reasonable for the situation at hand, or whether you first need better ones (more complex descriptions). This is often less straightforward than it seems (more is always better, right?) because added complexity has real costs: sometimes a direct financial cost, always an opportunity cost, and usually a communication cost too, since more complexity is harder to explain.

That’s why we default to simple narratives in the first place: they minimize these costs. They are so easy to communicate that some people make them up on the spot, and AI makes this even easier. See for yourself: go to a chatbot and ask for a narrative that explains why <insert literally anything here> is a good or bad idea.

If two opposing narratives like these make predictions about real-world outcomes, reality will eventually line up with one more than the other, which at least starts to expose the truth. But that process is backward-looking and interminably frustrating. So what can you do instead?

First, you can heed Rule 1 and get models appropriate to the situation. As the world has grown more complex, successfully understanding and interacting with its dynamic systems requires increasingly sophisticated descriptions of reality, especially if you want to produce specific outcomes. Simplistic models generally won’t cut it. Yet complicated models are hard to understand and easy to get wrong. So I think the best you can do is ground your assumptions in trusted data and repeatedly run real-world experiments to fine-tune them, with as tight a feedback loop as possible.

See other Rules.